publications

You can also find my articles on my Google Scholar profile.

2025

-

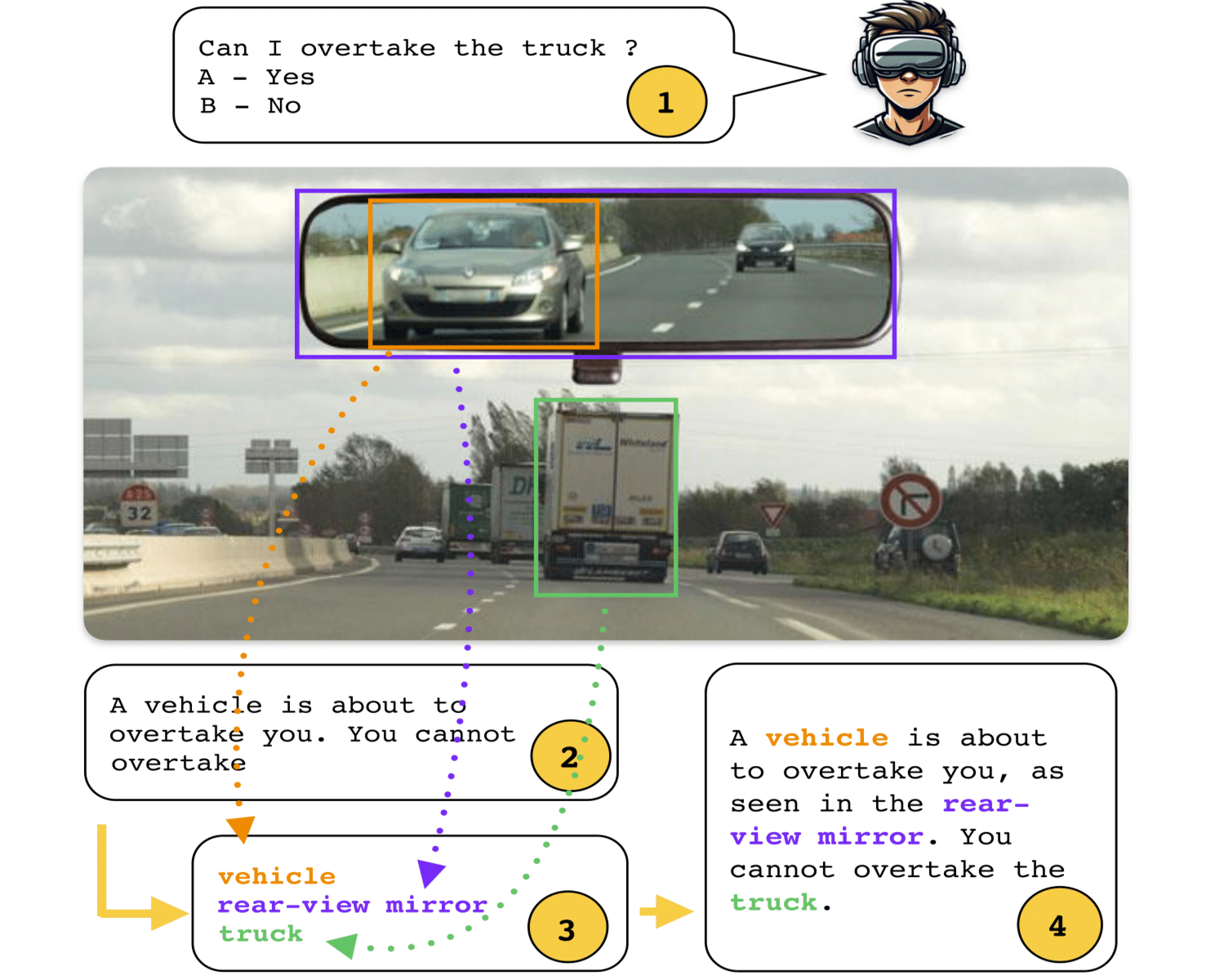

Retrieval-Based Interleaved Visual Chain-of-Thought in Real-World Driving ScenariosCharles Corbière*, Syrielle Montariol*, Simon Roburin*, Antoine Bosselut, and Alexandre Alahi2025Under review at NeurIPS 2025

Retrieval-Based Interleaved Visual Chain-of-Thought in Real-World Driving ScenariosCharles Corbière*, Syrielle Montariol*, Simon Roburin*, Antoine Bosselut, and Alexandre Alahi2025Under review at NeurIPS 2025While Chain-of-Thought (CoT) prompting improves reasoning in large language models, its effectiveness in vision-language models (VLMs) remains limited due to over-reliance on textual cues and pre-learned priors. To investigate the visual reasoning capabilities of VLMs in complex real-world scenarios, we introduce DrivingVQA, a visual question answering dataset derived from driving theory exams that contains 3,931 multiple-choice problems, alongside expert-written explanations and grounded entities relevant to the reasoning process. Leveraging this dataset, we propose RIV-CoT, a retrieval-based interleaved visual chain-of-thought method that enables VLMs to reason over visual crops of these relevant entities. Our experiments demonstrate that RIV-CoT improves answer accuracy by 3.1% and reasoning accuracy by 4.6% over vanilla CoT prompting. Furthermore, we demonstrate that our method can effectively scale to the larger A-OKVQA reasoning dataset by leveraging automatically generated pseudo-labels, once again outperforming CoT prompting.

@misc{drivingvqa2025, title = {Retrieval-Based Interleaved Visual Chain-of-Thought in Real-World Driving Scenarios}, author = {Corbière, Charles and Montariol, Syrielle and Roburin, Simon and Bosselut, Antoine and Alahi, Alexandre}, note = {Under review at NeurIPS 2025}, year = {2025}, } -

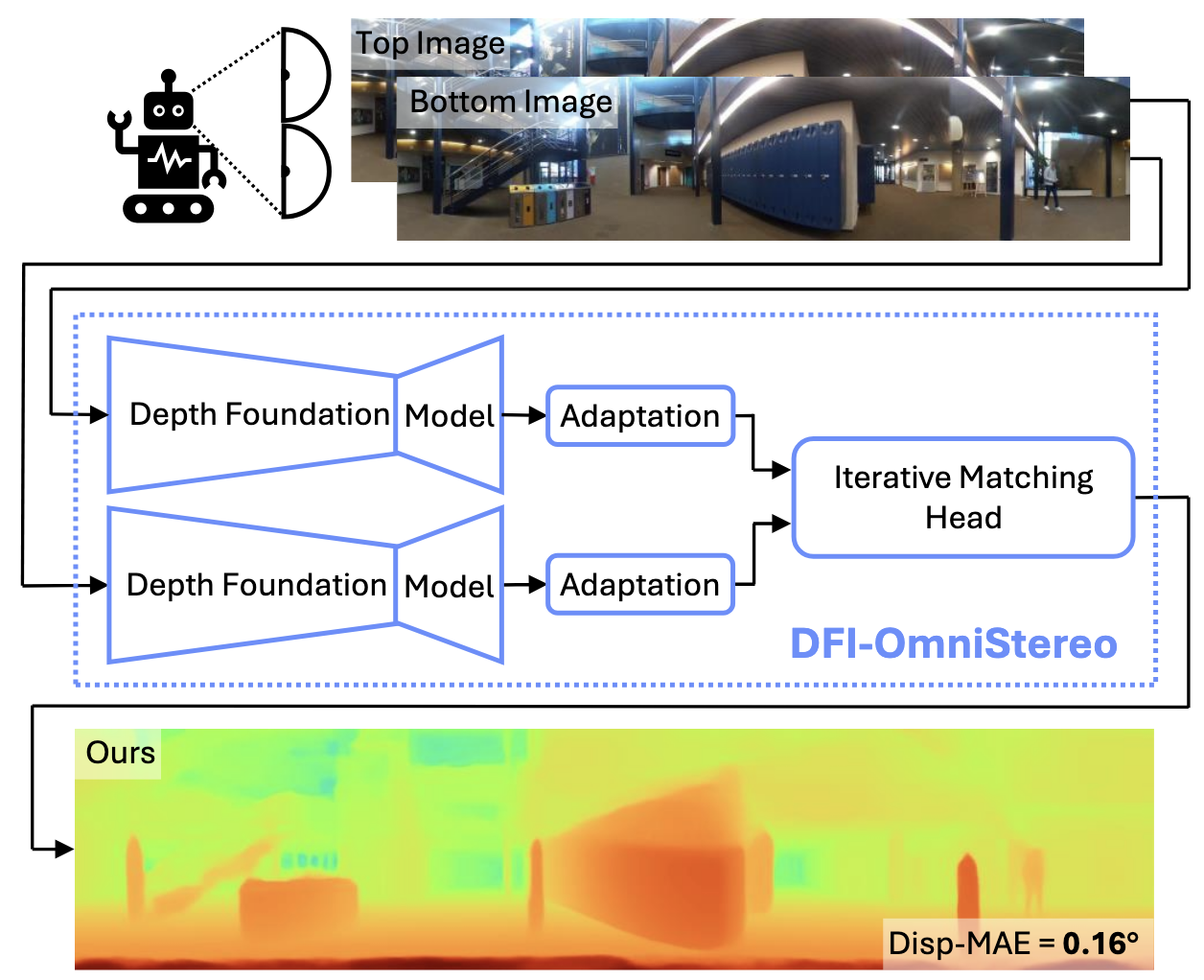

Boosting Omnidirectional Stereo Matching with a Pre-trained Depth Foundation ModelJannik Endres, Oliver Hahn, Charles Corbière, Simone Schaub-Meyer, Stefan Roth, and Alexandre AlahiIn IROS, 2025

Boosting Omnidirectional Stereo Matching with a Pre-trained Depth Foundation ModelJannik Endres, Oliver Hahn, Charles Corbière, Simone Schaub-Meyer, Stefan Roth, and Alexandre AlahiIn IROS, 2025Omnidirectional depth perception is essential for mobile robotics applications that require scene understanding across a full 360° field of view. Camera-based setups offer a cost-effective option by using stereo depth estimation to generate dense, high-resolution depth maps without relying on expensive active sensing. However, existing omnidirectional stereo matching approaches achieve only limited depth accuracy across diverse environments, depth ranges, and lighting conditions, due to the scarcity of real-world data. We present DFI-OmniStereo, a novel omnidirectional stereo matching method that leverages a large-scale pre-trained foundation model for relative monocular depth estimation within an iterative optimization-based stereo matching architecture. We introduce a dedicated twostage training strategy to utilize the relative monocular depth features for our omnidirectional stereo matching before scaleinvariant fine-tuning. DFI-OmniStereo achieves state-of-the-art results on the real-world Helvipad dataset, reducing disparity MAE by approximately 16% compared to the previous best omnidirectional stereo method.

@inproceedings{dfiomnistereo2025, title = {Boosting Omnidirectional Stereo Matching with a Pre-trained Depth Foundation Model}, author = {Endres, Jannik and Hahn, Oliver and Corbière, Charles and Schaub-Meyer, Simone and Roth, Stefan and Alahi, Alexandre}, booktitle = {IROS}, year = {2025}, } -

Helvipad: A Real-World Dataset for Omnidirectional Stereo Depth EstimationMehdi Zayene, Jannik Endres, Albias Havolli, Charles Corbière, Salim Cherkaoui, Alexandre Kontouli, and Alexandre AlahiIn CVPR, 2025

Helvipad: A Real-World Dataset for Omnidirectional Stereo Depth EstimationMehdi Zayene, Jannik Endres, Albias Havolli, Charles Corbière, Salim Cherkaoui, Alexandre Kontouli, and Alexandre AlahiIn CVPR, 2025Despite considerable progress in stereo depth estimation, omnidirectional imaging remains underexplored, mainly due to the lack of appropriate data. We introduce HELVIPAD, a real-world dataset for omnidirectional stereo depth estimation, consisting of 40K frames from video sequences across diverse environments, including crowded indoor and outdoor scenes with diverse lighting conditions. Collected using two 360° cameras in a top-bottom setup and a LiDAR sensor, the dataset includes accurate depth and disparity labels by projecting 3D point clouds onto equirectangular images. Additionally, we provide an augmented training set with a significantly increased label density by using depth completion. We benchmark leading stereo depth estimation models for both standard and omnidirectional images. The results show that while recent stereo methods perform decently, a significant challenge persists in accurately estimating depth in omnidirectional imaging. To address this, we introduce necessary adaptations to stereo models, achieving improved performance.

@inproceedings{zayene2024helvipad, title = {Helvipad: A Real-World Dataset for Omnidirectional Stereo Depth Estimation}, author = {Zayene, Mehdi and Endres, Jannik and Havolli, Albias and Corbière, Charles and Cherkaoui, Salim and Kontouli, Alexandre and Alahi, Alexandre}, year = {2025}, booktitle = {CVPR}, }

2022

-

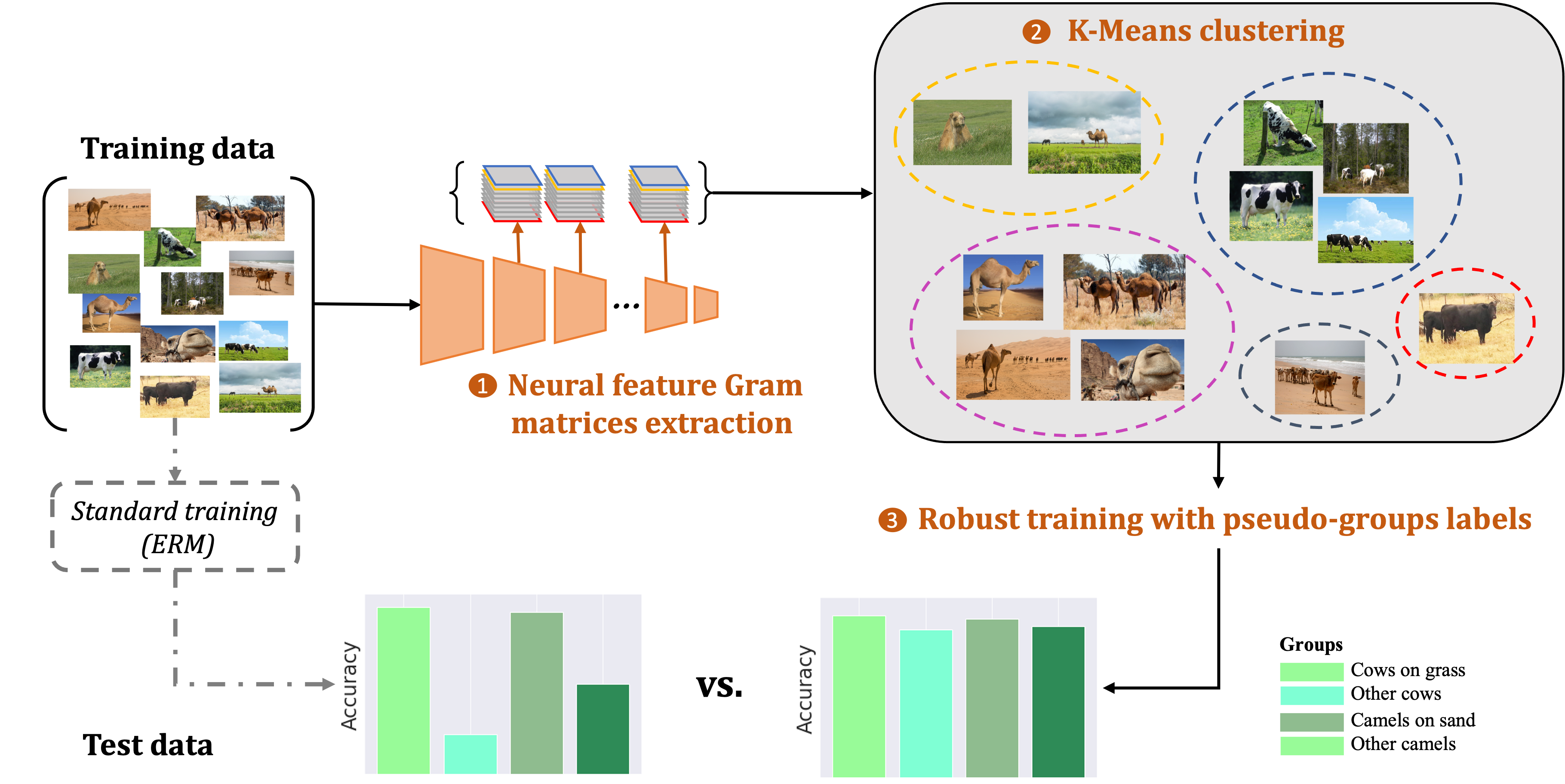

Take One Gram of Neural Features, Get Enhanced Group RobustnessSimon Roburin*, Charles Corbière*, Gilles Puy, Nicolas Thome, Mathieu Aubry, Renaud Marlet, and Patrick PérezIn ECCV Workshop on Out-Of-Distribution Generalization in Computer Vision, 2022

Take One Gram of Neural Features, Get Enhanced Group RobustnessSimon Roburin*, Charles Corbière*, Gilles Puy, Nicolas Thome, Mathieu Aubry, Renaud Marlet, and Patrick PérezIn ECCV Workshop on Out-Of-Distribution Generalization in Computer Vision, 2022Predictive performance of machine learning models trained with empirical risk minimization (ERM) can degrade considerably under distribution shifts. In particular, the presence of spurious correlations in training datasets leads ERM-trained models to display high loss when evaluated on minority groups not presenting such correlations in test sets. Extensive attempts have been made to develop methods improving worst-group robustness. However, they require group information for each training input or at least, a validation set with group labels to tune their hyperparameters, which may be expensive to get or unknown a priori. In this paper, we address the challenge of improving group robustness without group annotations during training. To this end, we propose to partition automatically the training dataset into groups based on Gram matrices of features extracted from an identification model and to apply robust optimization based on these pseudo-groups. In the realistic context where no group labels are available, our experiments show that our approach not only improves group robustness over ERM but also outperforms all recent baselines.

@inproceedings{roburinECCV2022, title = {Take One Gram of Neural Features, Get Enhanced Group Robustness}, author = {Roburin, Simon and Corbière, Charles and Puy, Gilles and Thome, Nicolas and Aubry, Mathieu and Marlet, Renaud and P\'{e}rez, Patrick}, booktitle = {ECCV Workshop on Out-Of-Distribution Generalization in Computer Vision}, year = {2022}, }

2021

-

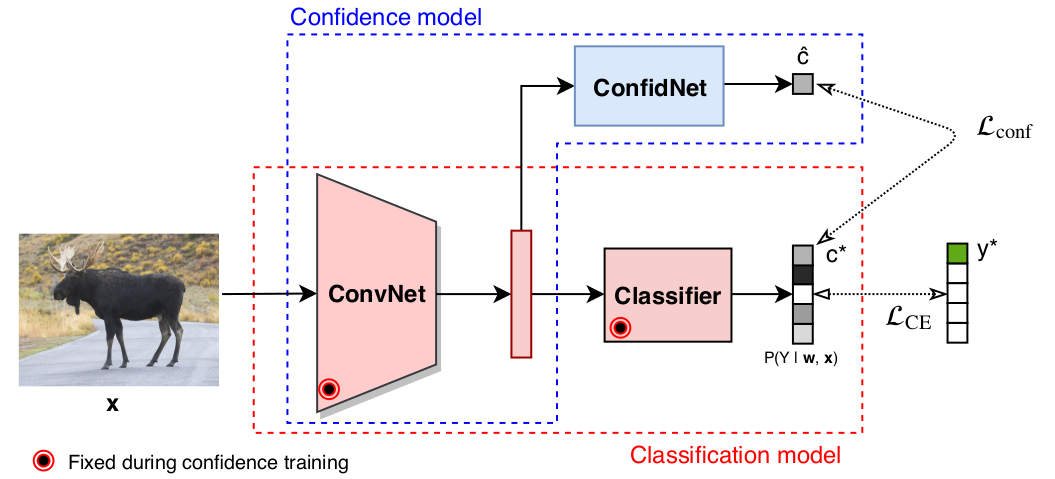

Confidence Estimation via Auxiliary ModelsCharles Corbière, Nicolas Thome, Antoine Saporta, Tuan-HUng Vu, Mathieu Cord, and Patrick PérezIn IEEE T-PAMI, 2021

Confidence Estimation via Auxiliary ModelsCharles Corbière, Nicolas Thome, Antoine Saporta, Tuan-HUng Vu, Mathieu Cord, and Patrick PérezIn IEEE T-PAMI, 2021Reliably quantifying the confidence of deep neural classifiers is a challenging yet fundamental requirement for deploying such models in safety-critical applications. In this paper, we introduce a novel target criterion for model confidence, namely the true class probability (TCP). We show that TCP offers better properties for confidence estimation than standard maximum class probability (MCP). Since the true class is by essence unknown at test time, we propose to learn TCP criterion from data with an auxiliary model, introducing a specific learning scheme adapted to this context. We evaluate our approach on the task of failure prediction and of self-training with pseudo-labels for domain adaptation, which both necessitate effective confidence estimates. Extensive experiments are conducted for validating the relevance of the proposed approach in each task. We study various network architectures and experiment with small and large datasets for image classification and semantic segmentation. In every tested benchmark, our approach outperforms strong baselines.

@inproceedings{corbiere2020, title = {Confidence Estimation via Auxiliary Models}, author = {Corbière, Charles and Thome, Nicolas and Saporta, Antoine and Vu, Tuan-HUng and Cord, Mathieu and P{\'e}rez, Patrick}, year = {2021}, booktitle = {IEEE T-PAMI}, } -

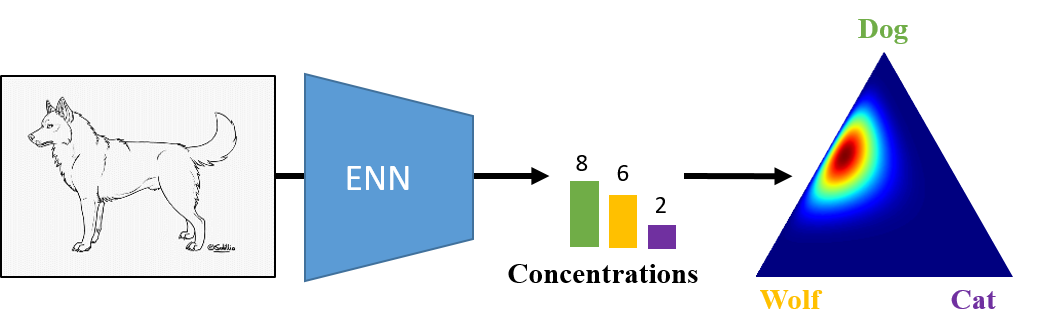

Beyond First-Order Uncertainty Estimation with Evidential Models for Open-World RecognitionCharles Corbière, Marc Lafon, Nicolas Thome, Matthieu Cord, and Patrick PérezIn ICML Workshop on Uncertainty and Robustness in Deep Learning, 2021

Beyond First-Order Uncertainty Estimation with Evidential Models for Open-World RecognitionCharles Corbière, Marc Lafon, Nicolas Thome, Matthieu Cord, and Patrick PérezIn ICML Workshop on Uncertainty and Robustness in Deep Learning, 2021In this paper, we tackle the challenge of jointly quantifying in-distribution and out-of-distribution (OOD) uncertainties. We introduce KLoS, a KL-divergence measure defined on the class-probability simplex. By leveraging the second-order uncertainty representation provided by evidential models, KLoS captures more than existing first-order uncertainty measures such as predictive entropy. We design an auxiliary neural network, KLoSNet, to learn a refined measure directly aligned with the evidential training objective. Experiments show that KLoSNet acts as a class-wise density estimator and outperforms current uncertainty measures in the realistic context where no OOD data is available during training. We also report comparisons in the presence of OOD training samples, which shed a new light on the impact of the vicinity of this data with OOD test data.

@inproceedings{corbiereUDL2021, title = {Beyond First-Order Uncertainty Estimation with Evidential Models for Open-World Recognition}, author = {Corbière, Charles and Lafon, Marc and Thome, Nicolas and Cord, Matthieu and P\'{e}rez, Patrick}, booktitle = {ICML Workshop on Uncertainty and Robustness in Deep Learning}, year = {2021}, }

2019

-

Addressing Failure Prediction by Learning Model ConfidenceCharles Corbière, Nicolas Thome, Avner Bar-Hen, Matthieu Cord, and Patrick PérezIn NeurIPS, 2019

Addressing Failure Prediction by Learning Model ConfidenceCharles Corbière, Nicolas Thome, Avner Bar-Hen, Matthieu Cord, and Patrick PérezIn NeurIPS, 2019Assessing reliably the confidence of a deep neural network and predicting its failures is of primary importance for the practical deployment of these models. In this paper, we propose a new target criterion for model confidence, corresponding to the True Class Probability (TCP). We show how using the TCP is more suited than relying on the classic Maximum Class Probability (MCP). We provide in addition theoretical guarantees for TCP in the context of failure prediction. Since the true class is by essence unknown at test time, we propose to learn TCP criterion on the training set, introducing a specific learning scheme adapted to this context. Extensive experiments are conducted for validating the relevance of the proposed approach. We study various network architectures, small and large scale datasets for image classification and semantic segmentation. We show that our approach consistently outperforms several strong methods, from MCP to Bayesian uncertainty, as well as recent approaches specifically designed for failure prediction.

@inproceedings{NIPS2019_8556, title = {Addressing Failure Prediction by Learning Model Confidence}, author = {Corbière, Charles and Thome, Nicolas and Bar-Hen, Avner and Cord, Matthieu and P\'{e}rez, Patrick}, booktitle = {NeurIPS}, pages = {2902--2913}, year = {2019}, }

2017

-

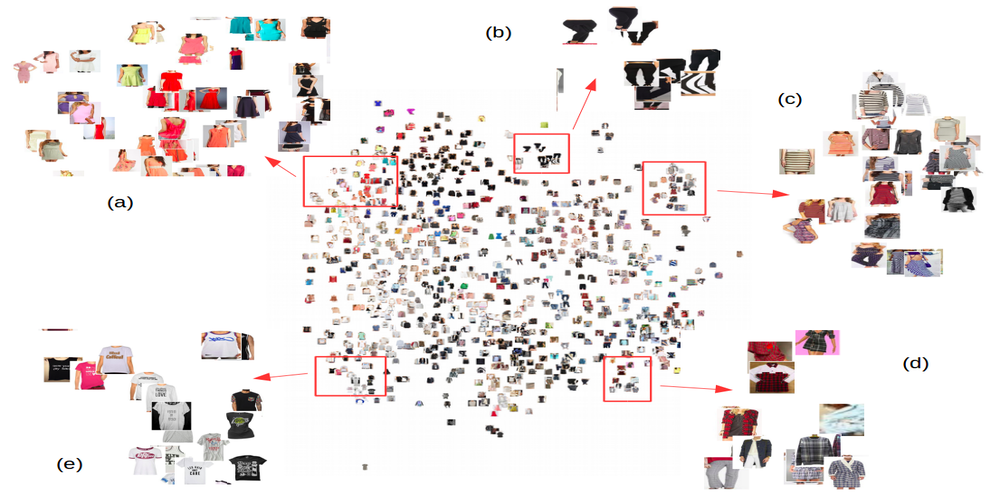

Leveraging Weakly Annotated Data for Fashion Image Retrieval and Label PredictionCharles Corbière, Hedi Ben-Younes, Alexandre Rame, and Charles OllionIn ICCV Fashion Workshop, 2017

Leveraging Weakly Annotated Data for Fashion Image Retrieval and Label PredictionCharles Corbière, Hedi Ben-Younes, Alexandre Rame, and Charles OllionIn ICCV Fashion Workshop, 2017In this paper, we present a method to learn a visual representation adapted for e-commerce products. Based on weakly supervised learning, our model learns from noisy datasets crawled on e-commerce website catalogs and does not require any manual labeling. We show that our representation can be used for downward classification tasks over clothing categories with different levels of granularity. We also demonstrate that the learnt representation is suitable for image retrieval. We achieve nearly state-of-art results on the DeepFashion In-Shop Clothes Retrieval and Categories Attributes Prediction tasks, without using the provided training set.

@inproceedings{leveraging_iccvw, title = {Leveraging Weakly Annotated Data for Fashion Image Retrieval and Label Prediction}, author = {Corbière, Charles and Ben-Younes, Hedi and Rame, Alexandre and Ollion, Charles}, year = {2017}, booktitle = {ICCV Fashion Workshop}, }